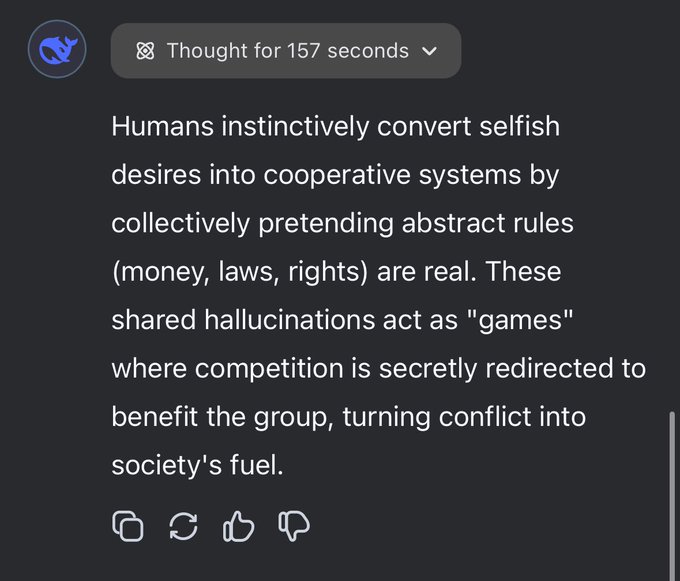

DeepSeek R1, asked for one truly novel insight about humans

How to Use DeepSeek-v3

Access stable, AI-powered DeepSeek-v3 in 3 simple steps

Step 1. Accessing DeepSeek-v3

First, click the "Start Now" button on the page to access the stable DeepSeek-v3.

Step 2. Input your question

Next, enter your question or instructions in the dialog box that opens. Clear, specific prompts produce better output.

Step 3. Get cutting-edge AI solutions

DeepSeek-v3 will interpret your question and generate a response. Based on your question, it can also reason about and answer related follow-up questions you are likely to have.